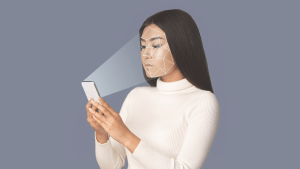

Under the Online Safety Act, X (formerly Twitter) faces possible repercussions from the regulator Ofcom over reports that its in-house AI has been used to create intimate deepfake images of users without their consent. This illegal content not only misappropriates users’ images but also manipulates them into sexualised and deeply distressing material that is then disseminated across the internet.

The reports, which concern the Grok AI chatbot account on X, have prompted an investigation by Ofcom, with a particular focus on any evidence that sexual abuse images of children have been created.

These deepfakes could have huge ramifications for a person’s safety and digital footprint. Deepfakes also target the celebrity world, posing serious safety and reputational risks to public figures.

The safety watchdog said it had contacted X on Monday 5 January and set a strict deadline of 9 January for X to show what steps it had taken to comply with its duties. After completing an expedited assessment, Ofcom formally opened an investigation into X, although it is the platforms themselves that are responsible for deciding whether their content breaks UK law.

AI-generated imagery is now nearly impossible to distinguish from reality, and regulators’ authority is limited. A ‘wait and see’ approach from regulators could lead to sharply rising rates of cybercrime.

The Online Safety Act sets out the process Ofcom must follow when investigating a company and deciding whether it has failed to comply with its legal obligations.

An Ofcom spokesperson said: “Reports of Grok being used to create and share illegal non-consensual intimate images and child sexual abuse material on X have been deeply concerning. Platforms must protect people in the UK from content that’s illegal in the UK, and we won’t hesitate to investigate where we suspect companies are failing in their duties, especially where there’s a risk of harm to children.”

“We’ll progress this investigation as a matter of the highest priority, while ensuring we follow due process. As the UK’s independent online safety enforcement agency, it’s important we make sure our investigations are legally robust and fairly decided.”